
What is Container Orchestration?

Software development and deployment have evolved beyond traditional methods. Applications are often no longer built as single, monolithic blocks. Instead, they are broken down into smaller, manageable services that are packaged and run in containers. Containers are lightweight, portable, and run consistently across different environments. But as the number of containers grows, managing them becomes increasingly complex. This is where container orchestration steps in.

Container orchestration is the automated arrangement, coordination, and management of containers. It ensures that containers are deployed in the right place, at the right time, and with the right resources. It also handles scaling, networking, load balancing, and health monitoring. Without orchestration, managing containers at scale would be chaotic and error-prone.

Why Container Orchestration Matters

Containers are excellent for packaging applications and their dependencies into a single unit. However, running a few containers manually is manageable; running hundreds or thousands is not. Imagine a large-scale application with microservices architecture. Each service might run in multiple containers across different servers. If one container fails, another must replace it instantly. If traffic spikes, more containers must be spun up to handle the load. All of this must happen automatically and reliably.

Container orchestration platforms solve these problems by:

- Automating deployment and scaling of containers

- Managing container lifecycles

- Monitoring container health and restarting failed containers

- Distributing workloads across available infrastructure

- Managing service discovery and networking between containers

- Enforcing security policies and access controls

These capabilities are essential for modern DevOps practices and continuous delivery pipelines.

Core Features of Container Orchestration

To understand container orchestration better, let’s break down its core functionalities:

These features make orchestration platforms indispensable for managing containerized applications in production environments.

How Container Orchestration Works

At its core, container orchestration involves a control plane and a set of worker nodes. The control plane is responsible for making global decisions about the cluster (like scheduling), while the worker nodes run the actual container workloads.

Here’s a simplified workflow of how orchestration works:

- Define Desired State: You write a configuration file (usually in YAML or JSON) that describes how your application should run—how many replicas, what image to use, what ports to expose, etc.

- Submit to Orchestrator: This configuration is submitted to the orchestration platform.

- Scheduler Assigns Work: The scheduler decides which nodes should run the containers based on available resources.

- Containers Are Deployed: The orchestrator pulls the container images and starts them on the selected nodes.

- Health Checks and Monitoring: The orchestrator continuously monitors the containers. If one fails, it is restarted or replaced.

- Scaling and Updates: If traffic increases, the orchestrator can spin up more containers. If you update your app, the orchestrator can perform rolling updates with zero downtime.

This process ensures that your application is always running in the desired state, even in the face of failures or changes.

Popular Container Orchestration Tools

Several tools are available for container orchestration, each with its own strengths and use cases. The most widely used are:

Among these, Kubernetes has emerged as the industry standard due to its flexibility, scalability, and strong community support. However, each tool has its own niche and may be better suited for specific scenarios.

Container Orchestration vs. Traditional Deployment

To appreciate the value of container orchestration, it helps to compare it with traditional deployment methods:

This comparison highlights why container orchestration is a game-changer for modern software delivery.

YAML Configuration Example

Here’s a basic example of a Kubernetes deployment configuration in YAML:
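A minimal sketch of such a manifest follows. The name web-app, the app label, and the image reference registry.example.com/web-app:1.0 are illustrative placeholders, not values from a real deployment.

```yaml
# Illustrative Deployment; name, labels, and image are assumed placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3                  # desired number of identical Pods
  selector:
    matchLabels:
      app: web-app             # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: registry.example.com/web-app:1.0   # hypothetical image
          ports:
            - containerPort: 80                     # port the container listens on
```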

This file tells the orchestrator to run three replicas of a container using the specified image and expose port 80. The orchestrator takes care of the rest—scheduling, monitoring, and scaling.

Benefits of Using Container Orchestration

Using a container orchestration platform brings several benefits:

- High Availability: Applications remain available even if some containers or nodes fail.

- Efficient Resource Use: Workloads are distributed to make the best use of available hardware.

- Faster Time to Market: Developers can deploy updates quickly and reliably.

- Improved Security: Policies can be enforced at the container level.

- Simplified Operations: Complex tasks like scaling and rolling updates are automated.

These advantages make orchestration essential for businesses aiming to deliver software at scale.

Challenges of Container Orchestration

Despite its benefits, container orchestration is not without challenges:

- Steep Learning Curve: Tools like Kubernetes have complex architectures and require expertise.

- Operational Overhead: Managing clusters and configurations can be time-consuming.

- Security Risks: Misconfigurations can expose containers to attacks.

- Tooling Complexity: Integrating orchestration with CI/CD, monitoring, and logging tools requires careful planning.

Organizations must weigh these challenges against the benefits to determine if orchestration is the right fit.

Real-World Applications

Container orchestration is used across industries for various purposes:

- E-commerce: Handle traffic spikes during sales events by auto-scaling services.

- Finance: Run secure, compliant microservices for banking applications.

- Healthcare: Deploy and manage sensitive applications with strict uptime requirements.

- Media: Stream video content with scalable backend services.

- Gaming: Manage multiplayer game servers that need to scale dynamically.

These use cases show how orchestration enables innovation and resilience in mission-critical systems.

Summary Table: What Container Orchestration Solves

This table summarizes the practical problems that container orchestration addresses, making it a cornerstone of modern infrastructure.

Final Thoughts on the Mechanics

Understanding the mechanics of container orchestration is crucial for anyone involved in software development, operations, or security. It’s not just about running containers—it’s about running them efficiently, securely, and at scale. Whether you’re deploying a simple web app or a complex microservices architecture, orchestration provides the tools to manage it all with confidence and control.

What is Kubernetes?

Kubernetes Core Mechanics

Kubernetes structures its operations around several foundational components that collaborate to manage containerized environments efficiently.

Cluster Structure

A functioning Kubernetes system is made up of two distinct node types:

- Control Plane (Master Node): Directs the entire cluster, making decisions like scheduling containers and responding to system events.

- Worker Nodes: Execute the deployed application containers and handle the actual workload processing.

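Each node type runs critical subsystems: the control plane hosts kube-apiserver, etcd, kube-scheduler, and kube-controller-manager, while every worker node runs the kubelet, kube-proxy, and a container runtime.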

Pod Fundamentals

Pods encapsulate one or more tightly coupled containers that share networking and volumes. They act as the atomic unit of deployment. Because Pods are ephemeral, controllers such as Deployments automatically replace failed or terminated Pods to maintain reliability.

Deployment Strategies

Deployments manage the desired state of application Pods. You declare how many Pod replicas should run, and the Deployment controller maintains that number, replacing or adding instances as necessary. Rollouts occur in stages, and faulty updates can be rolled back quickly.

Service Abstractions

Services map workload Pods to network endpoints, enabling stable, reachable communication regardless of Pod lifecycle. By default, Kubernetes assigns each Service a stable virtual IP and a DNS name. These support internal service discovery or exposure via node ports, ingress controllers, or external load balancers depending on configuration.
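As a rough illustration, a Service that fronts a set of Pods might be declared as follows. The Service name and the app: web-app label are assumptions made for this sketch.

```yaml
# Illustrative ClusterIP Service; name and labels are assumed placeholders.
apiVersion: v1
kind: Service
metadata:
  name: web-app
spec:
  type: ClusterIP          # internal only; NodePort or LoadBalancer expose it externally
  selector:
    app: web-app           # traffic goes to Pods carrying this label
  ports:
    - port: 80             # port the Service listens on
      targetPort: 80       # container port that receives the traffic
```

If this Service lived in the default namespace of a cluster using the default cluster.local domain, other Pods could reach it at web-app.default.svc.cluster.local.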

Namespace Utilization

Namespaces create isolated environments within a shared Kubernetes cluster, accommodating multi-team or multi-project usage without sacrificing organization or security. Resources like Deployments, Services, and ConfigMaps can be logically grouped under unique namespaces.

Automation and Application Lifecycle Control

Kubernetes introduces resilience and responsiveness via automation mechanisms built into the platform.

Intelligent Container Scheduling

The scheduler compares each Pod's CPU and memory requests against the unallocated capacity of worker nodes to find a suitable node for new workloads. Placement decisions can also take into account taints and tolerations, node and Pod affinity rules, and other constraints.
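A sketch of how placement hints appear in a Pod spec is shown below. The node label disktype=ssd and the taint dedicated=batch:NoSchedule are hypothetical and would need to exist on the cluster's nodes.

```yaml
# Illustrative Pod with scheduling constraints; label and taint names are assumed.
apiVersion: v1
kind: Pod
metadata:
  name: scheduled-pod
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: disktype          # hypothetical node label
                operator: In
                values: ["ssd"]
  tolerations:
    - key: dedicated                   # hypothetical taint key
      operator: Equal
      value: batch
      effect: NoSchedule               # allows scheduling onto nodes with this taint
  containers:
    - name: app
      image: nginx:1.25
```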

Fault Recovery

Dead or non-responsive Pods are automatically terminated and replaced. Node-level faults trigger a redistribution of their workloads, ensuring that service availability persists even during partial infrastructure failures.
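How Kubernetes decides that a container is unresponsive is configured through probes. The following is a minimal sketch, assuming an HTTP server that answers on path / and port 80. The timing values are illustrative rather than recommended defaults.

```yaml
# Illustrative probes; path, port, and timings are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: probed-app
spec:
  containers:
    - name: app
      image: nginx:1.25
      livenessProbe:             # repeated failures cause the container to be restarted
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
        failureThreshold: 3
      readinessProbe:            # failures remove the Pod from Service endpoints
        httpGet:
          path: /
          port: 80
        periodSeconds: 5
```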

Automatic Scaling

Replicas can be dynamically increased or decreased. Scaling can occur manually or via metrics-based triggers like CPU usage, allowing infrastructure to adapt to user demand.
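Metrics-based scaling is typically expressed with a HorizontalPodAutoscaler. The sketch below assumes a Deployment named web-app and a 70% CPU target, both chosen only for illustration. It also assumes a metrics source such as metrics-server is installed and that the target containers declare CPU requests.

```yaml
# Illustrative autoscaler; target name and thresholds are assumed.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-app                # hypothetical Deployment to scale
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # average CPU utilization, relative to requests
```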

Seamless Updates

Deployments utilize incremental rollout strategies to deploy new application versions without downtime. Failed updates can be reverted with built-in rollback capabilities, improving development agility and minimizing disruptions.

Secrets and Configuration Separation

Kubernetes stores sensitive data like passwords and tokens in dedicated objects called Secrets, which are base64-encoded by default and encrypted only when encryption at rest is configured. Likewise, ConfigMaps inject configuration values into containers without modifying images themselves.
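One way this separation might look in practice is sketched below. The object names, keys, and values are placeholders invented for the example.

```yaml
# Illustrative ConfigMap, Secret, and consuming Pod; all names and values are assumed.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: info
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
stringData:                  # plain text here; stored base64-encoded by the API server
  DB_PASSWORD: change-me
---
apiVersion: v1
kind: Pod
metadata:
  name: configured-app
spec:
  containers:
    - name: app
      image: nginx:1.25
      envFrom:               # expose every key as an environment variable
        - configMapRef:
            name: app-config
        - secretRef:
            name: app-secret
```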

Load Distribution and Service Access

Services route traffic across multiple Pod replicas; the exact distribution algorithm depends on the kube-proxy mode (random selection in iptables mode, round-robin by default in IPVS mode). Naming conventions and the internal DNS resolver allow containers to communicate using service names instead of IPs. Kubernetes supports external access through NodePorts, Ingress resources, or cloud-integrated LoadBalancer endpoints.

Persistent Storage Integration

Workloads can bind to persistent volumes regardless of their runtime node. Kubernetes provisions and attaches underlying storage layers (block, file, or object) across clouds or on-prem infrastructure using a plugin or CSI driver interface.
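A workload usually requests storage through a PersistentVolumeClaim. In this sketch the StorageClass name standard and the 10Gi size are assumptions, and the classes actually available depend on the cluster.

```yaml
# Illustrative claim; StorageClass name and size are assumed.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
    - ReadWriteOnce            # mountable read-write by a single node
  storageClassName: standard   # hypothetical StorageClass
  resources:
    requests:
      storage: 10Gi
```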

Network Architecture

Each Pod receives an exclusive IP from the cluster’s internal network. This flat design permits direct Pod-to-Pod communication across nodes without port forwarding or address translation.

Service Exposure Methods

Protection Layers and Permissions

Security controls encompass user-level access, inter-Pod traffic, system-wide configurations, and encrypted storage.

RBAC System

Role-Based Access Control governs what actions users or service accounts can take on specific resources. Permissions can be scoped at namespace or cluster levels.
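A namespace-scoped grant could be sketched as a Role plus a RoleBinding. The namespace team-a and the user jane are hypothetical.

```yaml
# Illustrative read-only access to Pods; namespace and user are assumed.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: team-a
  name: pod-reader
rules:
  - apiGroups: [""]                  # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  namespace: team-a
  name: read-pods
subjects:
  - kind: User
    name: jane                       # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

For cluster-wide permissions, the equivalent objects are ClusterRole and ClusterRoleBinding.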

Traffic Segmentation

NetworkPolicies dictate allowable traffic routes to or from selected Pods. Rules are crafted based on labels and enforcement direction (ingress or egress).
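The following sketch admits ingress to Pods labeled app: api only from Pods labeled app: frontend. The labels, namespace, and port are assumptions, and enforcement requires a network plugin that implements NetworkPolicy.

```yaml
# Illustrative ingress rule; labels, namespace, and port are assumed.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-api
  namespace: team-a
spec:
  podSelector:
    matchLabels:
      app: api                 # Pods this policy protects
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend    # only these Pods may connect
      ports:
        - protocol: TCP
          port: 8080
```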

Secret Isolation

Secrets are stored inside etcd in a base64-encoded format, ideally with encryption at rest enabled. Access is further limited via RBAC and volume mount restrictions.

Pod Admission Controls

Pod Security Admission (PSA), replacing older PodSecurityPolicies, evaluates and accepts or rejects Pods based on security context fields like privilege escalation, host networking usage, and runAsUser settings.
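Pod Security Admission is switched on per namespace through labels. A minimal sketch, assuming a namespace named team-a that should enforce the restricted profile:

```yaml
# Illustrative namespace labels; the namespace name is assumed.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject non-compliant Pods
    pod-security.kubernetes.io/warn: restricted      # also surface warnings to clients
```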

Ecosystem Components and Plug-ins

Kubernetes leverages a modular design that accommodates extensions and integrations.

- Helm Charts automate application deployment using template-based manifests.

- Prometheus & Grafana collect metrics and provide visual dashboards.

- Istio provides a service mesh that controls service-to-service traffic and enforces policies.

- kubectl performs direct API communication through commands and scripts.

- Kustomize enables manifest customization with overlays and patches.

Manifest Sample: Application Deployment
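A sketch consistent with the description in this section is shown here. The object name, the app: nginx label, and the image tag are illustrative choices.

```yaml
# Illustrative Nginx Deployment; name, label, and image tag are assumed.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx               # selects the Pods created from the template
  template:                    # common specification shared by all replicas
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.25
          ports:
            - containerPort: 80
```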

This file instructs Kubernetes to spin up three stateless web server Pods running Nginx. Pods share the same label selector and are created from a common specification.

Hybrid and Multi-Cloud Operation

Kubernetes adapts to diversified infrastructure — bare metal, private data centers, or public clouds — with compatible setups across vendors.

Managed Kubernetes Variants

Providers abstract away control plane maintenance, upgrades, and monitoring, allowing teams to focus on workloads.

System Overhead and Operational Drawbacks

Though scalable and robust, Kubernetes introduces certain trade-offs.

- Steep Onboarding: Teams unfamiliar with distributed systems or DevOps practices may struggle initially.

- Complex Management: Monitoring resources in large, multi-tenant clusters can be intricate.

- Security Loopholes: Poorly scoped permissions or misconfigured ports lead to potential vulnerabilities.

- Resource Costs: Required allocations for system components like kube-proxy, kubelet, and control plane services increase the minimum hardware footprint.

Resource Regulation

Containers claim and restrict compute resources by specifying CPU and memory thresholds.

Requests define what’s reserved. Limits act as hard boundaries. This balance ensures fairness across applications and avoids noisy neighbor effects.
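In a manifest, these thresholds sit under each container's resources field. The values below are arbitrary examples, not sizing guidance.

```yaml
# Illustrative requests and limits; the numbers are assumed.
apiVersion: v1
kind: Pod
metadata:
  name: bounded-app
spec:
  containers:
    - name: app
      image: nginx:1.25
      resources:
        requests:              # reserved capacity used for scheduling decisions
          cpu: 250m
          memory: 128Mi
        limits:                # hard ceiling enforced at runtime
          cpu: 500m
          memory: 256Mi
```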

Observability and Metrics

Logs and telemetry are exported using log-forwarding agents and metric exporters.

- Fluentd, Logstash, and Filebeat forward logs to aggregators.

- Elasticsearch provides queryable log indexing.

- Kibana provides search and visualization over indexed logs for investigating trends and incidents.

- Prometheus scrapes node and application metrics on a schedule.

- Grafana presents real-time dashboards backed by Prometheus or Loki.

Custom Objects and API Integration

The Kubernetes control plane is built on exposed RESTful APIs.

Custom Resources

Teams can define tailored APIs through CustomResourceDefinitions, making it possible to represent domain-specific entities.

Operators and controllers read these custom objects, apply business-specific logic, and manipulate Kubernetes-native resources in response.
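To make this concrete, a CustomResourceDefinition for a hypothetical Backup resource might look like the sketch below. The group example.com, the kind, and the schedule and retentionDays fields are invented for illustration.

```yaml
# Illustrative CRD; group, kind, and schema fields are assumed.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: backups.example.com    # must be <plural>.<group>
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: backups
    singular: backup
    kind: Backup
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                schedule:
                  type: string       # hypothetical field, e.g. a cron expression
                retentionDays:
                  type: integer      # hypothetical field
```

Once a definition like this is registered, a controller can watch Backup objects and act on them.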

What is Red Hat OpenShift?

OpenShift operates as a comprehensive application platform built on Kubernetes, but customized for enterprise-scale needs. It enhances container orchestration with tools that enable streamlined development, secure operations, and automated lifecycle management within tightly controlled environments. Unlike basic Kubernetes distributions, OpenShift brings together critical components that simplify the delivery and maintenance of software in modern, scalable infrastructure.

Enhanced Architecture Leveraging Kubernetes

Kubernetes forms the core control layer, orchestrating workloads across clusters. OpenShift expands this foundation by embedding infrastructure services, security guardrails, and curated workflows that eliminate the friction associated with running distributed applications in production.

- Developer Utility Layer: oc, a specialized CLI, interfaces with the platform and includes functionality beyond kubectl. Additional tools such as the web console and build automation via Source-to-Image (S2I) allow rapid deployment from code repositories without writing Dockerfiles.

- Hardened Security Controls: Default constraints ensure containers do not execute with root privileges. Authentication systems like LDAP and OAuth are natively integrated into identity management workflows. Policies restrict resource access and define execution boundaries per workload.

- CI/CD Tooling Ecosystem: Pre-integrated Jenkins pipelines offer legacy support, while Tekton support caters to Kubernetes-native CI/CD strategies.

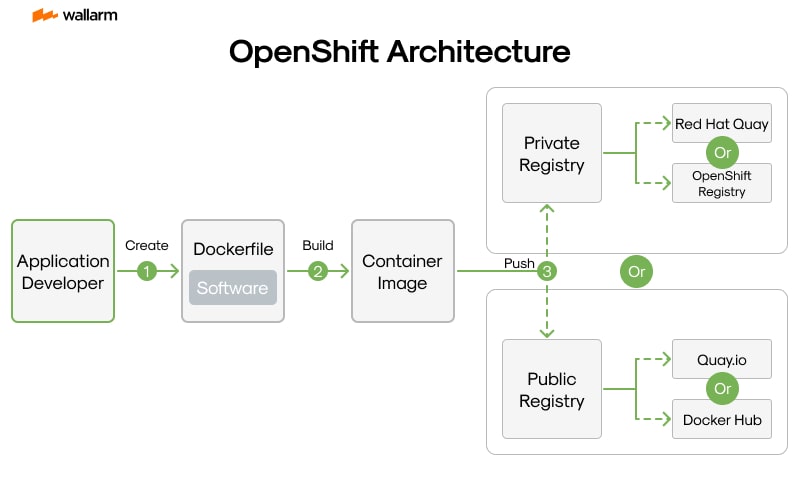

- Secure Image Workflows: An internal container registry, image stream objects, and policies for signature validation streamline software integrity checks and automated deployment updates.

- Tenant Isolation: Namespaces are extended via "projects," enabling segmentation of resources and user permissions across teams or business units.

Composition of the platform includes:

- Control Plane Nodes: The API server, controller manager, and scheduler components handle orchestration requests and workload distribution.

- Compute Nodes: Application pods reside on worker nodes, with resource limits and quotas enforced at the project level.

- State Engine: Etcd persists state data and configuration used across the cluster.

- Traffic Pipeline: An HAProxy-based router operates as an ingress gateway, distributing client requests to internal services or pods.

- Build & Storage Node Services: Internal image registry handles push/pull storage via a built-in distribution service. Optional scalable object storage can be used for persistent volume claims (PVCs).

Dev Workflow Optimization

The S2I (Source-to-Image) system behind OpenShift builds app images directly from source code repositories. Developers specify a base builder image (e.g., Node.js, Python), and push configuration directly via CLI.

Execution flow:

- Repository is cloned.

- The builder image runs its embedded build logic against the cloned source.

- Output container is registered in the local registry.

- A deployment configuration is generated and scaled to run the app.

No Dockerfile. No manual image tagging. This process reduces build cycle time and decreases reliance on DevOps tooling during early development.

Access Enforcement and Platform Security

Workload governance is enforced through a layered approach using:

- Security Context Constraints (SCCs): Define execution privileges and restrict capabilities. Examples include denying privilege escalation, requiring a non-root UID, and restricting the volume types a Pod may mount.

- Authentication Providers: LDAP, Keystone, OAuth2, and custom tokens integrate with enterprise user directories to enforce single sign-on (SSO) and user role mapping.

- Policy Engines: Role bindings extend Kubernetes RBAC policies with enhancements such as project administrators and user-specific permissions using short-lived tokens.

- Cryptographic Validation: Registry auto-rejects unsigned or tampered images if enforcement is enabled, ensuring only approved builds are allowed in production.

Example: Running a pod as a non-root user is a default behavior, with SCC policies automatically denying privileged or host-access containers unless whitelisted.
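On the workload side, a Pod that states these expectations explicitly could be sketched as follows. The image reference is a hypothetical placeholder for an image built to run as a non-root user.

```yaml
# Illustrative non-root Pod; name and image are assumed placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: nonroot-app
spec:
  securityContext:
    runAsNonRoot: true                 # refuse to start if the container would run as UID 0
    seccompProfile:
      type: RuntimeDefault
  containers:
    - name: app
      image: registry.example.com/web-app:1.0   # hypothetical non-root image
      securityContext:
        allowPrivilegeEscalation: false
        capabilities:
          drop: ["ALL"]
```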

Security Comparison:

Platform Operation Components

Operations teams gain real-time insights and automation through:

- Monitoring Stack: Prometheus scrapes metrics at the node and container levels. Grafana dashboards visualize trends in resource consumption, application availability, and API server performance.

- Centralized Logging: Fluentd agents forward structured log data into Elasticsearch clusters. Kibana dashboards present analysis layers for anomaly detection and query-based monitoring.

- Self-healing Automation: Kubernetes Operators manage complex workloads such as stateful services by encoding domain-specific logic into controllers.

- GUI Management: The web console delivers a fully featured interface for navigating resources, initiating deployments, scaling pods, and inspecting issues.

Operator Example:

Deploying this Operator sets up automatic backup schedules, version upgrades, and pod redeployments if failures are detected by probes or node health checks.

Ingress and External Exposure

HAProxy-based routers are pre-installed, enabling native route exposure on install. Custom subdomains and TLS termination are handled automatically. Routing logic supports weighted backends, sticky sessions based on cookies, and URI-based path routing.

A backend service is exposed with the oc expose command, for example oc expose service/frontend (the service name is illustrative).

The command automatically generates a routable URL derived from cluster domain settings and maps it to the internal service port.
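The same exposure can also be declared as a Route object. This is a minimal sketch, assuming a Service named frontend that serves on port 8080 (both hypothetical). Because no host is specified, the router derives one from the cluster's domain settings.

```yaml
# Illustrative Route; Service name and port are assumed.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: frontend
spec:
  to:
    kind: Service
    name: frontend             # hypothetical backend Service
  port:
    targetPort: 8080           # Service port that receives the traffic
  tls:
    termination: edge          # TLS is terminated at the router
```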

Internal Image Registry and Automation

Artifact storage is handled by a built-in OpenShift registry, reducing reliance on third-party container registries or public services. ImageStreams monitor tags and initiate triggers when updates are detected.

Image pull and push use standard Docker semantics, enhanced with webhook listeners and configurable automation.

A pulled image creates or updates an ImageStream. DeploymentConfigs that reference the stream roll out when the image tag changes, driven by trigger hooks.
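An ImageStream that tracks an external tag might be declared roughly like this. The stream name and the source image registry.example.com/team/web:latest are assumptions for the sketch.

```yaml
# Illustrative ImageStream; name and source image are assumed.
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: web
spec:
  tags:
    - name: latest
      from:
        kind: DockerImage
        name: registry.example.com/team/web:latest   # hypothetical upstream image
      importPolicy:
        scheduled: true        # periodically re-import to detect tag updates
```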

CI/CD Systems and Pipelines

Both legacy and cloud-native CI/CD capabilities are embedded. Jenkins capabilities are integrated via pod templates using Kubernetes Agents, while Tekton is used for modern pipelines using CRDs.

Example Tekton pipeline:
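A rough sketch of such a pipeline is given below. It assumes Tasks named git-clone, buildah, and openshift-client are available in the namespace, with the parameter and workspace names used in the Tekton catalog versions of those Tasks. The pipeline name, parameters, and the web-app deployment are hypothetical.

```yaml
# Illustrative pipeline; Task availability, names, and parameters are assumptions.
apiVersion: tekton.dev/v1
kind: Pipeline
metadata:
  name: build-and-deploy
spec:
  params:
    - name: git-url
      type: string
    - name: image
      type: string
  workspaces:
    - name: shared-workspace       # carries the source between tasks
  tasks:
    - name: fetch-source
      taskRef:
        name: git-clone
      params:
        - name: url
          value: $(params.git-url)
      workspaces:
        - name: output
          workspace: shared-workspace
    - name: build-image
      runAfter: ["fetch-source"]
      taskRef:
        name: buildah
      params:
        - name: IMAGE
          value: $(params.image)
      workspaces:
        - name: source
          workspace: shared-workspace
    - name: deploy
      runAfter: ["build-image"]
      taskRef:
        name: openshift-client
      params:
        - name: SCRIPT
          value: oc rollout restart deployment/web-app   # hypothetical deployment
```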

This configuration defines a pipeline for pulling source code, building a container image using Buildah, and then deploying to OpenShift using trusted credentials.

Enterprise-Level Support Options

Multiple deployment configurations exist to suit enterprise compliance or operational models.

- On-Prem Installation: Complete infrastructure control with full customization, often used by private data centers or air-gapped environments.

- Red Hat Managed Plans:

  - OpenShift Dedicated: Hosted on public cloud and operated by the Red Hat SRE team.

  - ROSA (Red Hat OpenShift Service on AWS): Co-managed offering integrated into AWS billing and IAM.

  - ARO (Azure Red Hat OpenShift): Joint support model with Microsoft Azure, including preconfigured networking and autoscaling.

Red Hat offers certified hardware support, SLA-bound bug fixing, security patching, and access to subscription repositories for certified third-party integrations like storage operators, databases, or service meshes.

OpenShift vs Kubernetes: Key Differences and Comparison

Architecture and Core Components

Kubernetes and OpenShift are both powerful platforms for container orchestration, but their internal architectures and the way they manage components differ significantly. Kubernetes is an open-source project maintained by the Cloud Native Computing Foundation (CNCF), while OpenShift is a commercial product developed by Red Hat that builds on Kubernetes and adds several layers of functionality and security.

Kubernetes provides a modular architecture with components like the kube-apiserver, kube-scheduler, kube-controller-manager, and etcd. These components work together to manage containerized applications across a cluster. Kubernetes is designed to be flexible and allows users to plug in their own networking, storage, and authentication solutions.

OpenShift, on the other hand, includes all the core Kubernetes components but adds its own set of tools and services. These include the OpenShift API server, integrated CI/CD pipelines, a built-in image registry, and enhanced role-based access control (RBAC). OpenShift also enforces stricter security policies out of the box, such as preventing containers from running as root.

Installation and Setup

The installation process for Kubernetes and OpenShift is one of the most noticeable differences between the two platforms. Kubernetes offers a variety of installation methods, including kubeadm, kops, and Minikube, along with third-party tools like Rancher. These methods provide flexibility but often require manual configuration of networking, storage, and security.

OpenShift simplifies the installation process through its installer, which automates much of the setup. In OpenShift 4.x, the installer's Installer-Provisioned Infrastructure (IPI) mode deploys clusters on supported platforms like AWS, Azure, GCP, and bare metal, handling provisioning, configuration, and bootstrapping of the cluster. A User-Provisioned Infrastructure (UPI) mode is also available when the infrastructure is prepared separately.

User Experience and Developer Tools

Kubernetes provides a command-line interface (kubectl) for interacting with the cluster. While powerful, kubectl has a steep learning curve for new users. Kubernetes does not include a graphical user interface (GUI) by default, though third-party dashboards can be added.

OpenShift enhances the developer experience by including a web-based console that allows users to manage resources, view logs, and monitor workloads visually. It also includes the oc CLI, which extends kubectl with additional commands specific to OpenShift. OpenShift’s developer tools include Source-to-Image (S2I), which allows developers to build container images directly from source code without writing Dockerfiles.

Security and Access Control

Security is a major area where OpenShift and Kubernetes diverge. Kubernetes provides basic RBAC and network policies, but it leaves much of the security configuration up to the administrator. This flexibility can be powerful but also risky if not configured correctly.

OpenShift enforces stricter security policies by default. For example, it prevents containers from running as root and uses Security Context Constraints (SCCs) to define what containers can and cannot do. OpenShift also integrates with enterprise authentication systems like LDAP, Active Directory, and OAuth out of the box.

Networking and Service Mesh

Kubernetes supports a wide range of networking plugins through the Container Network Interface (CNI). This allows users to choose from solutions like Calico, Flannel, and Weave. Kubernetes also supports service meshes like Istio, but these must be installed and configured separately.

OpenShift ships with a default network plugin (OpenShift SDN in earlier 4.x releases, OVN-Kubernetes in more recent ones) but also supports other CNI plugins. OpenShift Service Mesh, based on Istio, is available as an add-on and is tightly integrated with the platform. This makes it easier to deploy and manage service meshes in OpenShift environments.

Monitoring and Logging

Monitoring and logging are essential for managing production workloads. Kubernetes does not include built-in monitoring or logging solutions. Users must integrate tools like Prometheus, Grafana, Fluentd, and Elasticsearch manually.

OpenShift includes monitoring and logging stacks out of the box. It provides Prometheus and Grafana for metrics, and Elasticsearch, Fluentd, and Kibana (EFK) for logging. These tools are pre-configured and integrated with the platform, reducing the setup time and complexity.

CI/CD Integration

Kubernetes does not include a native CI/CD pipeline. Users must integrate external tools like Jenkins, GitLab CI, or ArgoCD. This provides flexibility but requires additional configuration and maintenance.

OpenShift includes OpenShift Pipelines, a CI/CD solution based on Tekton. It also supports Jenkins and other tools, but the built-in pipelines provide a Kubernetes-native way to define and run CI/CD workflows. OpenShift Pipelines are integrated with the OpenShift console, making it easier for developers to manage builds and deployments.

Licensing and Support

Kubernetes is completely open-source and free to use. However, enterprise support must be obtained through third-party vendors like Google (GKE), Amazon (EKS), or Microsoft (AKS). These managed services offer support, SLAs, and additional features.

OpenShift is a commercial product. While there is an open-source version called OKD (Origin Community Distribution), most enterprises use Red Hat OpenShift, which requires a subscription. This subscription includes support, updates, and access to certified container images and operators.

Custom Resource Definitions (CRDs) and Operators

Kubernetes allows users to extend its functionality using Custom Resource Definitions (CRDs). This enables the creation of custom APIs and controllers to manage complex applications. Operators are a pattern built on CRDs that automate the lifecycle of applications.

OpenShift fully supports CRDs and Operators but takes it a step further with the OperatorHub, a curated marketplace of certified Operators. Red Hat also provides tools to build and manage Operators more easily, making OpenShift a more operator-friendly platform.

Command-Line Comparison

Below is a simple comparison of common commands in Kubernetes (kubectl) and OpenShift (oc):

While both tools are similar, oc includes additional commands tailored for OpenShift environments, such as oc new-app, which simplifies application deployment.

Summary Table: OpenShift vs Kubernetes

This detailed comparison reveals that while Kubernetes offers flexibility and a strong open-source foundation, OpenShift provides a more integrated and secure experience out of the box.

Advantages and Disadvantages of OpenShift and Kubernetes

Core Advantages of Kubernetes

Kubernetes, often abbreviated as K8s, is the go-to container orchestration platform for many developers and DevOps teams. Its open-source nature and strong community support make it a flexible and powerful tool. Below are the core advantages that make Kubernetes a popular choice:

- Vendor-Neutral and Open Source: Kubernetes is maintained by the Cloud Native Computing Foundation (CNCF), which ensures it remains vendor-agnostic. This allows users to deploy it on any cloud provider or on-premises infrastructure without being locked into a specific ecosystem.

- Large Community and Ecosystem: With thousands of contributors and a massive user base, Kubernetes benefits from rapid updates, extensive documentation, and a wide array of third-party tools and plugins.

- Scalability: Kubernetes is designed to scale horizontally. It can manage thousands of containers across clusters, making it ideal for large-scale applications.

- Custom Resource Definitions (CRDs): Kubernetes allows users to extend its capabilities through CRDs, enabling the creation of custom APIs and controllers tailored to specific use cases.

- Granular Control: Kubernetes provides fine-grained control over networking, storage, and compute resources. This is ideal for organizations that need to tweak every aspect of their deployment.

- Multi-Cloud and Hybrid Cloud Support: Kubernetes can run across multiple cloud providers or in hybrid environments, offering flexibility in deployment strategies.

- Declarative Configuration: Kubernetes uses YAML files for configuration, which allows for version control and reproducibility of deployments.

- Rolling Updates and Rollbacks: Kubernetes supports zero-downtime deployments with rolling updates and can roll back to previous versions if something goes wrong.

Core Disadvantages of Kubernetes

Despite its strengths, Kubernetes has its share of drawbacks, especially for teams without deep DevOps experience:

- Steep Learning Curve: Kubernetes has a complex architecture involving pods, services, deployments, replica sets, and more. New users often find it overwhelming.

- Manual Setup and Maintenance: Setting up a Kubernetes cluster from scratch requires significant effort and expertise. Maintenance tasks like upgrades and security patching are also manual unless automated with additional tools.

- Security Complexity: Kubernetes provides powerful security features, but configuring them correctly is not straightforward. Misconfigurations can lead to vulnerabilities.

- Resource Intensive: Running Kubernetes requires a considerable amount of system resources, especially for control plane components like etcd, kube-apiserver, and kube-controller-manager.

- Limited Built-in CI/CD: Kubernetes does not come with built-in continuous integration or delivery pipelines. Users must integrate third-party tools like Jenkins, ArgoCD, or Tekton.

- No Native Multi-Tenancy: Kubernetes lacks robust multi-tenancy features out of the box, making it harder to isolate workloads in shared environments.

Core Advantages of OpenShift

OpenShift, developed by Red Hat, is a Kubernetes distribution with added features aimed at enterprise users. It builds on Kubernetes and adds tools, security, and automation to make container orchestration more accessible and secure.

- Integrated Developer Tools: OpenShift includes a built-in web console, CLI tools, and IDE plugins that simplify development and deployment workflows.

- Enterprise-Grade Security: OpenShift enforces stricter security policies by default. For example, it runs containers as non-root users and applies built-in Security Context Constraints (SCCs).

- Built-in CI/CD Pipelines: OpenShift includes OpenShift Pipelines (based on Tekton) and OpenShift GitOps (based on ArgoCD), offering native support for continuous integration and delivery.

- Automated Cluster Management: OpenShift provides automated installation, upgrades, and lifecycle management through tools like the OpenShift Installer and Cluster Version Operator.

- Red Hat Support: With a Red Hat subscription, users get enterprise-level support, certified container images, and access to Red Hat’s ecosystem of tools and services.

- Multi-Tenancy Support: OpenShift supports multi-tenancy through projects and role-based access control (RBAC), making it easier to isolate workloads and teams.

- Integrated Monitoring and Logging: OpenShift ships a preconfigured monitoring stack based on Prometheus, with Grafana dashboards and Elasticsearch-based logging available depending on the version.

- Operator Framework: OpenShift supports Kubernetes Operators and includes the OperatorHub, making it easier to deploy and manage complex applications.

Core Disadvantages of OpenShift

While OpenShift simplifies many aspects of Kubernetes, it also introduces its own limitations:

- Cost: OpenShift is not free. While there is a community edition (OKD), the enterprise version requires a Red Hat subscription, which can be expensive for small teams.

- Less Flexibility: OpenShift enforces stricter security and operational policies, which can limit customization. For example, running containers as root is not allowed by default.

- Complex Upgrades: Although OpenShift automates upgrades, the process can still be complex and may require downtime in certain scenarios.

- Resource Overhead: OpenShift includes many additional components that consume system resources, making it heavier than a vanilla Kubernetes setup.

- Learning Curve for OpenShift-Specific Tools: Teams familiar with Kubernetes may need to learn new tools and workflows specific to OpenShift, such as the oc CLI and the OpenShift Console.

- Limited Community Edition: OKD, the open-source version of OpenShift, lags behind the enterprise version in features and support, making it less appealing for production use.

When Kubernetes Shines

Kubernetes is a strong fit for teams that:

- Have experienced DevOps engineers.

- Need full control over their infrastructure.

- Want to avoid vendor lock-in.

- Are building custom CI/CD pipelines.

- Prefer open-source tools and community support.

- Are deploying across multiple cloud providers.

Example:
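A minimal sketch of such a Deployment, assuming a hypothetical application named web-app and the public nginx:1.25 image as a stand-in. None of these values come from a real environment.

```yaml
# Illustrative only: the name, labels, image, and resource figures are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
  labels:
    app: web-app
spec:
  replicas: 3                  # desired number of identical pods
  selector:
    matchLabels:
      app: web-app             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: nginx:1.25    # stand-in image
          ports:
            - containerPort: 80
          resources:
            requests:          # what the scheduler reserves for each pod
              cpu: 100m
              memory: 128Mi
            limits:            # hard ceiling enforced at runtime
              cpu: 500m
              memory: 256Mi
```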

This YAML file shows how Kubernetes allows you to define a deployment with full control over replicas, selectors, and container specs.

When OpenShift Excels

OpenShift is ideal for organizations that:

- Require enterprise-grade support and SLAs.

- Want built-in CI/CD and GitOps capabilities.

- Need stricter security and compliance features.

- Prefer a user-friendly web interface.

- Are deploying in regulated industries (finance, healthcare).

- Want automated upgrades and lifecycle management.

Example OpenShift Route:
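A sketch of an edge-terminated Route, assuming a Service named web-app already exists in the project. The hostname and port are hypothetical placeholders.

```yaml
# Illustrative only: the host, Service name, and port are assumptions.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: web-app
spec:
  host: web-app.apps.example.com          # hypothetical external hostname
  to:
    kind: Service
    name: web-app                         # existing Service that receives the traffic
  port:
    targetPort: 8080                      # Service port (name or number) to route to
  tls:
    termination: edge                     # TLS ends at the router; traffic to the pod is plain HTTP
    insecureEdgeTerminationPolicy: Redirect   # send HTTP requests to HTTPS
```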

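This example shows how OpenShift simplifies exposing services over HTTPS using Routes. Routes are an OpenShift-specific resource; upstream Kubernetes covers the same need with Ingress resources, which require a separately installed ingress controller.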

Key Takeaways in List Format

Kubernetes Pros:

- Free and open-source.

- Highly customizable.

- Strong community support.

- Works across any infrastructure.

Kubernetes Cons:

- Complex to set up and manage.

- Requires third-party tools for CI/CD.

- Security must be manually configured.

OpenShift Pros:

- Enterprise-ready with support.

- Built-in developer tools and pipelines.

- Strong security defaults.

- Easier cluster management.

OpenShift Cons:

- Paid subscription required for the enterprise version.

- Less flexibility for custom configurations.

- Higher resource usage.

Use Cases: When to Choose OpenShift vs. Kubernetes

Enterprise-Grade Use Cases: When OpenShift Shines

Red Hat OpenShift is built on top of Kubernetes but includes a suite of additional tools and services that are pre-integrated and supported. This makes it especially suitable for enterprises that need a full-stack solution with built-in security, compliance, and developer tools. Below are specific scenarios where OpenShift is the better choice.

1. Regulated Industries (Finance, Healthcare, Government)

Organizations in highly regulated sectors often face strict compliance requirements such as HIPAA, PCI-DSS, or FedRAMP. OpenShift includes built-in security policies, role-based access control (RBAC), and audit logging features that help meet these standards out of the box.

Why OpenShift Wins:

- Integrated Security: Security Context Constraints (SCCs) are enforced by default.

- Audit Trails: Built-in logging and monitoring tools help with compliance.

- FIPS-validated Components: OpenShift supports FIPS 140-2 validated cryptographic modules.

Example:

A healthcare provider deploying patient data applications can use OpenShift’s built-in security and audit features as a starting point for HIPAA compliance, with less manual configuration than hardening a vanilla Kubernetes cluster.

2. Enterprises Needing Full DevOps Toolchain

OpenShift includes a complete CI/CD pipeline system using Jenkins, Tekton, and ArgoCD integrations. This is ideal for enterprises that want a ready-to-use DevOps environment without assembling tools manually.

Why OpenShift Wins:

- Source-to-Image (S2I): Automatically builds container images from source code.

- Integrated Pipelines: Tekton pipelines are natively supported.

- Developer Portals: OpenShift Developer Console provides a UI for managing builds and deployments.

Example:

A software company with multiple development teams can use OpenShift to standardize CI/CD workflows across teams, reducing setup time and increasing deployment velocity.

3. Organizations Requiring Multi-Tenancy and Governance

OpenShift provides strong multi-tenancy support with project-level isolation, quotas, and governance policies. This is critical for large organizations with multiple teams or departments sharing the same cluster.

Why OpenShift Wins:

- Project Isolation: Each team can have its own namespace with resource limits.

- Quota Management: Admins can enforce CPU, memory, and storage quotas.

- Policy Enforcement: Open Policy Agent (OPA) Gatekeeper can be added to enforce policy-as-code across projects.
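Per-team quotas of this kind are expressed with the standard Kubernetes ResourceQuota object, which OpenShift projects also use. The sketch below assumes a hypothetical team-a project; the figures are placeholders, not recommendations.

```yaml
# Illustrative only: the namespace name and all limits are assumptions.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    requests.cpu: "4"          # total CPU that pods in the project may request
    requests.memory: 8Gi
    limits.cpu: "8"            # total CPU limit across all pods
    limits.memory: 16Gi
    requests.storage: 100Gi    # total storage requested by persistent volume claims
    pods: "20"                 # maximum number of pods
```

Once applied, workloads that would push the project past any of these totals are rejected at admission time.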

Example:

A university IT department managing applications for different faculties can use OpenShift to isolate workloads and enforce resource limits per department.

4. Enterprises Demanding Commercial Support

OpenShift comes with Red Hat’s enterprise-grade support, including SLAs, security patches, and long-term maintenance. This is essential for mission-critical applications.

Why OpenShift Wins:

- 24/7 Support: Red Hat provides global support with guaranteed response times.

- Certified Ecosystem: OpenShift supports certified operators and integrations.

- Lifecycle Management: Red Hat publishes tested version upgrades and security patches.

Example:

A bank running critical financial applications can rely on Red Hat’s support to ensure uptime and security compliance.

Cloud-Native and Custom Use Cases: When Kubernetes Excels

Kubernetes is a flexible, open-source container orchestration platform that offers complete control and customization. It’s ideal for teams with strong DevOps skills who want to build their own platform or need to run lightweight, cloud-native workloads.

1. Startups and Small Teams with DevOps Expertise

Kubernetes is a great fit for small teams that want to avoid vendor lock-in and have the technical skills to manage infrastructure.

Why Kubernetes Wins:

- Cost-Effective: No licensing fees.

- Customizable: Choose your own ingress controllers, monitoring tools, and storage solutions.

- Lightweight: Deploy only what you need.

Example:

A startup building a SaaS product can use a managed Kubernetes service like GKE or EKS to minimize costs and retain full control over their stack.

2. Edge Computing and Lightweight Deployments

Kubernetes can be deployed on lightweight environments like Raspberry Pi clusters or edge devices using distributions like K3s or MicroK8s.

Why Kubernetes Wins:

- Minimal Footprint: K3s is optimized for low-resource environments.

- Offline Capabilities: Can run in disconnected or air-gapped environments.

- Custom Networking: Tailor networking to suit edge use cases.

Example:

A logistics company deploying tracking software on delivery trucks can use K3s to run Kubernetes workloads at the edge with minimal overhead.

3. Hybrid and Multi-Cloud Strategies

Kubernetes supports a wide range of cloud providers and on-premise environments, making it ideal for hybrid or multi-cloud deployments.

Why Kubernetes Wins:

- Cloud Agnostic: Run on AWS, Azure, GCP, or on-prem.

- Custom Integrations: Use any storage, networking, or monitoring solution.

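- Multi-Cluster Management: Multiple clusters across regions or clouds can be managed with additional tooling; the original Kubernetes Federation (KubeFed) project is no longer actively developed.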

Example:

A global enterprise with data centers in multiple countries can use Kubernetes to deploy applications consistently across AWS and on-premise infrastructure.

4. Research and Experimental Projects

Kubernetes is ideal for academic or experimental projects where flexibility and customization are more important than enterprise support.

Why Kubernetes Wins:

- Open Source: Full access to source code and community support.

- Rapid Prototyping: Easy to spin up clusters for testing.

- Modular Architecture: Swap out components like container runtimes or schedulers.

Example:

A university research lab experimenting with AI models can use Kubernetes to deploy GPU workloads and test different configurations without vendor constraints.

Code Snippet: OpenShift S2I vs Kubernetes Manual Build

OpenShift (Source-to-Image):

With Source-to-Image, a single command (oc new-app, pointed at a builder image and a Git repository) pulls the source code, builds a container image, and deploys it.

Kubernetes (Manual Build and Deploy):

In Kubernetes, you need to manage the build, push, and deployment steps separately, for example with docker build, docker push, and kubectl apply.

Conclusion: Which is Better for Your Business?

Expense Considerations and Licensing Models

Kubernetes, as a community-driven project, carries no direct licensing charges. It can be deployed across public clouds, private data centers, or edge environments without incurring usage fees. However, managing and operating Kubernetes clusters demands a qualified DevOps team familiar with installation, upgrades, monitoring, and security configurations. Additional expenses arise from assembling an ecosystem of third-party tools for monitoring, CI/CD, and access control.

OpenShift, in contrast, is a commercial distribution by Red Hat. Its licensing includes enterprise-level assistance, frequent security patches, and a suite of integrated features such as container image management and developer dashboards. The initial cost may be higher, but for teams lacking deep Kubernetes expertise or seeking faster delivery cycles, OpenShift can reduce total cost of ownership over time.

Developer Enablement and Tooling

Kubernetes prioritizes infrastructure control, leaving developer tools largely to administrators. Teams must create and manage Dockerfiles, Helm charts, and YAML manifests themselves. CI/CD functionality, if needed, requires external integrations with systems such as Jenkins or GitLab.

OpenShift embeds developer services directly within the platform. It ships with a graphical web console, automatic image builds using Source-to-Image (S2I), and integrated Tekton pipelines for automation. This setup allows developers to deploy with minimal concern for underlying orchestration tasks.

Security Controls and Enterprise Compliance

Bare Kubernetes allows comprehensive control over cluster security, but it offers few defaults. Administrators must manually define RBAC policies, configure pod security context, apply network segmentation rules, and handle TLS certificates.
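Two of these manual steps can be sketched as follows: a pod-level security context and a default-deny network policy. The namespace, pod name, and image are hypothetical, and the NetworkPolicy only takes effect when the cluster's network plugin enforces policies.

```yaml
# Illustrative only: names, namespace, and image are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: hardened-app
  namespace: demo
spec:
  securityContext:
    runAsNonRoot: true                 # refuse to start containers that would run as UID 0
    seccompProfile:
      type: RuntimeDefault             # use the container runtime's default seccomp filter
  containers:
    - name: app
      image: registry.example.com/app:1.0.0   # hypothetical image
      securityContext:
        allowPrivilegeEscalation: false
        capabilities:
          drop: ["ALL"]                # remove all Linux capabilities
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: demo
spec:
  podSelector: {}                      # empty selector matches every pod in the namespace
  policyTypes:
    - Ingress                          # no ingress rules listed, so all inbound traffic is denied
```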

OpenShift applies mandatory security constraints automatically. It runs containers without root privileges by default, enforces stricter pod security through Security Context Constraints (SCCs), and ships a built-in OAuth server that integrates with identity providers such as LDAP and OpenID Connect. Admission controls and audit logs are part of the platform, and image scanning is available through additional Red Hat components.

Surrounding Ecosystem and Tools Integration

Kubernetes thrives through its extensible ecosystem. Operators can customize nearly every component by choosing among diverse logging stacks, monitoring dashboards, service discovery tools, ingress controllers, and backup systems.

OpenShift includes many of these elements out-of-the-box. It bundles Prometheus for telemetry and offers supported add-ons for log management (Fluentd with Elasticsearch and Kibana in older releases) and an Istio-based service mesh. The tight integration makes version compatibility easier to maintain, though advanced users may find the system rigid.

Fit for Multi-Cloud and Hybrid Strategies

Kubernetes was architected for provider neutrality. It seamlessly supports AWS, Azure, GCP, or bare metal—making it ideal for organizations seeking to run container workloads across multiple infrastructures or regions.

OpenShift supports hybrid consistency via the Red Hat OpenShift Container Platform, with options for on-premise installations and cloud images for major providers. However, its tight coupling with Red Hat tools and support agreements may limit the flexibility to adopt third-party integrations.

Resource Management and Team Readiness

Kubernetes assumes operational maturity. It best suits environments with in-house platform teams capable of managing cluster provisioning, monitoring, upgrades, and security hardening. The learning curve is steep, and the margin for error is narrow.

OpenShift, by comparison, abstracts away many of these responsibilities. Through built-in operators and automated workflows, it allows teams with limited Kubernetes experience to run container workloads safely.

Platform-Use Mapping for Organizations

Kubernetes effectively serves startups, technology firms, and infrastructure-focused teams skilled in open-source technologies. It allows micro-tuning of every layer but demands technical depth.

OpenShift caters well to enterprises with governance requirements, industry audits, or minimal internal DevOps support. Its curated experience and vendor-led support improve stability and reduce rollout times.

Example: Deploying a Basic Web Service

Kubernetes Workload Manifest
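A minimal sketch of a basic web service on plain Kubernetes: a Deployment plus a Service in front of it. The hello-web name and the nginx:1.25 image are stand-in assumptions.

```yaml
# Illustrative only: names and image are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-web
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: hello-web
          image: nginx:1.25        # stand-in image
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: hello-web
spec:
  selector:
    app: hello-web                 # routes to pods created by the Deployment above
  ports:
    - port: 80
      targetPort: 80
```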

OpenShift Workload Definition
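A comparable sketch using an OpenShift DeploymentConfig with triggers. It assumes an image stream named hello-web exists in the project; all names are hypothetical. DeploymentConfig is deprecated in recent OpenShift releases in favor of standard Deployments, so treat this as an illustration of the trigger model rather than a recommendation.

```yaml
# Illustrative only: names and the image stream are assumptions.
apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
metadata:
  name: hello-web
spec:
  replicas: 2
  selector:
    app: hello-web                 # plain label map, not matchLabels
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: hello-web
          image: hello-web:latest  # replaced by the image change trigger below
          ports:
            - containerPort: 8080
  triggers:
    - type: ConfigChange           # redeploy when the pod template changes
    - type: ImageChange            # redeploy when a new image lands in the image stream
      imageChangeParams:
        automatic: true
        containerNames:
          - hello-web
        from:
          kind: ImageStreamTag
          name: hello-web:latest
```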

In this example, OpenShift’s DeploymentConfig adds automated build and release triggers on top of the basic workload definition, whereas a Kubernetes Deployment needs external tooling to achieve a similar outcome. Note that DeploymentConfig is deprecated as of OpenShift 4.14 in favor of standard Deployments.

OpenShift vs Kubernetes: FAQ

What is the main difference between Kubernetes and OpenShift?

Kubernetes is an open-source container orchestration platform originally developed by Google. It provides the core functionality to deploy, scale, and manage containerized applications. OpenShift, developed by Red Hat, is a Kubernetes distribution that includes additional tools, security features, and a developer-friendly interface.

Is OpenShift just Kubernetes with a UI?

No. While OpenShift includes a user-friendly web console, it also adds enterprise-grade features such as integrated CI/CD pipelines, stricter security defaults, and built-in monitoring tools. OpenShift is a full platform-as-a-service (PaaS) solution, whereas Kubernetes is a container orchestration engine. OpenShift includes Kubernetes but extends it with additional tools and policies.

Can I run Kubernetes and OpenShift together?

Technically, yes. Since OpenShift is built on Kubernetes, they share the same core architecture. However, running them side-by-side in the same environment is uncommon and may lead to conflicts in resource management, security policies, and networking configurations. It’s more practical to choose one based on your operational and business needs.

Which is easier to install: Kubernetes or OpenShift?

OpenShift provides a more streamlined installation process, especially for enterprise environments. Red Hat offers an installer-provisioned infrastructure (IPI) method that automates much of the setup. Kubernetes, on the other hand, offers more flexibility but requires manual configuration or third-party tools like kubeadm, kops, or Rancher.

Do I need to pay for OpenShift?

OpenShift has both free and paid versions. OKD (formerly OpenShift Origin) is the open-source community distribution of OpenShift and is free to use. However, Red Hat OpenShift includes enterprise support, certified container images, and additional tools, which require a subscription.

Kubernetes itself is free and open-source. However, enterprise support or managed services like Google Kubernetes Engine (GKE), Amazon EKS, or Azure AKS may incur costs.

How does security differ between Kubernetes and OpenShift?

OpenShift enforces stricter security policies out of the box. For example, it uses Security Context Constraints (SCCs) to control permissions for pods. Kubernetes previously relied on PodSecurityPolicies (PSPs), which were deprecated in v1.21 and removed in v1.25 in favor of the built-in Pod Security Admission controller.

OpenShift also restricts running containers as root by default, while Kubernetes allows it unless explicitly restricted. This makes OpenShift more secure by default but can also limit flexibility for developers.
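On upstream Kubernetes, a similar restriction can be switched on per namespace with Pod Security Admission labels, available in recent releases. The sketch below assumes a hypothetical team-a namespace and applies the restricted profile, which rejects pods that run as root.

```yaml
# Illustrative only: the namespace name is an assumption.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject pods that violate the profile
    pod-security.kubernetes.io/warn: restricted      # also show a warning to the client
    pod-security.kubernetes.io/audit: restricted     # and record violations in the audit log
```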

Can I use Helm charts in OpenShift?

Yes, OpenShift supports Helm charts. Helm is a package manager for Kubernetes that simplifies application deployment. OpenShift supports Helm 3 through both the helm CLI and the web console, allowing users to deploy applications using charts just like in Kubernetes.

However, due to OpenShift’s stricter security policies, some Helm charts may require modification to comply with OpenShift’s default constraints.

What are the networking differences between Kubernetes and OpenShift?

Kubernetes uses a flat network model where every pod gets its own IP address. It supports multiple CNI (Container Network Interface) plugins like Calico, Flannel, and Weave.

OpenShift ships with a default network plugin built on Open vSwitch (OVS): OpenShift SDN in older releases and OVN-Kubernetes in current ones. It also includes built-in support for ingress and egress network policies, making it easier to manage traffic flow.

Is OpenShift more secure than Kubernetes?

Out of the box, yes. OpenShift enforces stricter security policies, includes built-in authentication and authorization, and restricts container privileges. Kubernetes can be made equally secure, but it requires manual configuration and third-party tools.

Security in Kubernetes is more modular, allowing for flexibility but also increasing the risk of misconfiguration. OpenShift’s opinionated defaults reduce this risk.

What are the CI/CD capabilities in Kubernetes vs OpenShift?

Kubernetes does not include native CI/CD tools. You need to integrate third-party solutions like Jenkins, GitLab CI, or ArgoCD.

OpenShift includes built-in CI/CD pipelines using Jenkins and Tekton. It also provides a developer console to manage builds, deployments, and image streams.

Can I migrate from Kubernetes to OpenShift?

Yes, migration is possible but requires careful planning. Since OpenShift is built on Kubernetes, most workloads are compatible. However, differences in security policies, networking, and resource quotas may require adjustments.

Steps for migration:

- Audit current Kubernetes workloads.

- Review OpenShift security constraints.

- Modify deployment manifests if needed.

- Test in a staging OpenShift environment.

- Migrate data and persistent volumes.

- Deploy to production.

Is OpenShift only for Red Hat Linux?

Not entirely. In OpenShift 4, control-plane nodes run Red Hat Enterprise Linux CoreOS (RHCOS), and worker nodes run RHCOS or Red Hat Enterprise Linux (RHEL). The community distribution, OKD, runs on Fedora CoreOS, and earlier OpenShift 3 releases also supported CentOS and Fedora hosts. Container images themselves can be based on any Linux distribution.

How do updates and upgrades work in Kubernetes vs OpenShift?

Kubernetes upgrades are manual unless using a managed service like GKE or EKS. You need to upgrade the control plane and worker nodes separately.

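OpenShift provides a more automated upgrade process with built-in tools and version compatibility checks. Red Hat also publishes tested upgrade paths; rolling a cluster back to an earlier version is generally not supported, so upgrades should be validated in a staging environment first.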

Can I use OpenShift on public cloud?

Yes. OpenShift is available as a managed service on major cloud providers:

- AWS: Red Hat OpenShift Service on AWS (ROSA)

- Azure: Azure Red Hat OpenShift (ARO)

- IBM Cloud: Red Hat OpenShift on IBM Cloud

- Google Cloud: Self-managed, or Red Hat OpenShift Dedicated

These services offer the benefits of OpenShift with the scalability and flexibility of cloud infrastructure.

What programming languages are supported in OpenShift and Kubernetes?

Both platforms are language-agnostic. You can deploy applications written in any language as long as they are containerized. OpenShift provides additional support for source-to-image (S2I) builds, which can automatically create containers from source code in languages like Java, Python, Node.js, and Ruby.

How do I monitor applications in Kubernetes vs OpenShift?

Kubernetes requires integration with tools like Prometheus, Grafana, and the ELK stack for monitoring and logging.

OpenShift includes preconfigured monitoring based on Prometheus (with Grafana dashboards in some releases), integrated into the OpenShift console. Centralized logging is available as a supported add-on, historically built on Elasticsearch, Fluentd, and Kibana (the EFK stack).

How do I manage secrets in Kubernetes and OpenShift?

Both platforms support Kubernetes Secrets, which store sensitive data like passwords, tokens, and keys. OpenShift enhances this with tighter access controls and integration with enterprise secret management tools like HashiCorp Vault and CyberArk.
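A minimal sketch of a Secret and a pod that consumes it as an environment variable. The names and values are placeholders. Note that Secret data is only base64-encoded by default, so access should be restricted with RBAC and encryption at rest enabled where possible.

```yaml
# Illustrative only: names, image, and credential values are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
stringData:                        # plain-text input; stored base64-encoded under data
  username: app_user
  password: change-me
---
apiVersion: v1
kind: Pod
metadata:
  name: db-client
spec:
  containers:
    - name: app
      image: registry.example.com/app:1.0.0   # hypothetical image
      env:
        - name: DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials # the Secret defined above
              key: password
```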

Is OpenShift suitable for small teams?

OpenShift can be heavy for small teams due to its resource requirements and complexity. However, OKD (the open-source version) is a good option for smaller environments. Kubernetes may be more lightweight and flexible for startups or small development teams.

Can I use Docker with Kubernetes and OpenShift?

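Kubernetes deprecated dockershim, its built-in Docker Engine integration, in version 1.20 and removed it in version 1.24; clusters now use CRI-compatible runtimes such as containerd or CRI-O. OpenShift uses CRI-O by default. Images built with Docker still run unchanged because they follow the OCI image format, but the underlying runtime is different.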

How do I secure APIs in Kubernetes and OpenShift?

Both platforms support securing APIs using:

- TLS encryption

- Role-Based Access Control (RBAC)

- Network policies

- API gateways and service meshes (e.g., Kong, Istio)

OpenShift includes built-in OAuth and stricter RBAC policies, making it easier to secure APIs out of the box.
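As an illustration of the RBAC item above, the sketch below grants one user read-only access to pods in a single namespace. It works the same way on Kubernetes and OpenShift; the team-a namespace and the user jane are hypothetical.

```yaml
# Illustrative only: the namespace and user name are assumptions.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a
rules:
  - apiGroups: [""]                # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: team-a
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader                 # binds the Role above to the subject
  apiGroup: rbac.authorization.k8s.io
```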

How can I detect API vulnerabilities in Kubernetes and OpenShift?

Traditional security tools often miss API-specific threats. For a more advanced and automated approach, consider using Wallarm API Attack Surface Management (AASM). This agentless solution is designed to:

- Discover external hosts and their APIs

- Identify missing WAF/WAAP protections

- Detect API vulnerabilities

- Mitigate API data leaks

Wallarm AASM integrates seamlessly with both Kubernetes and OpenShift environments, offering real-time visibility into your API ecosystem. It’s especially useful for DevSecOps teams looking to secure complex microservices architectures.

👉 Try Wallarm AASM for free at https://www.wallarm.com/product/aasm-sign-up?internal_utm_source=whats and start protecting your APIs today.
